Being Rugged: How not to fail when things break.

A real-life tale of how Testing in Production has your back if you build safe enough failure states

Testing in production doesn't come for free. It takes intent and investment to build something safe enough to fail.

Building in ruggedness and observability builds you a safer space to ship fast and often. Here's a real-life demonstration showing the value of that investment.

We join a team that manages User Access

Users were getting stuff done on the site they helped manage. Engineers were building and shipping.

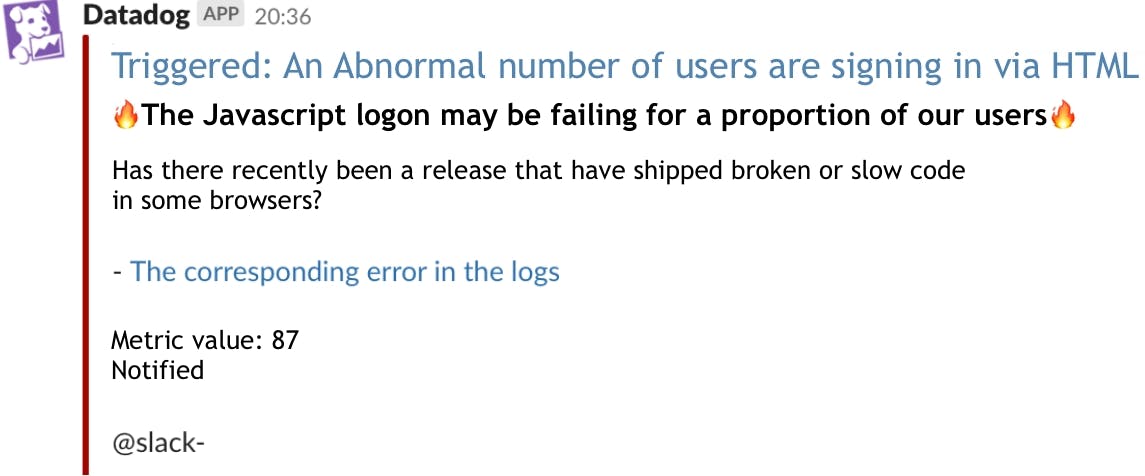

The team that looks after User Log-in received an alert in their chat room.

Something was up with the Authentication service. A fault on the log-in page.

Question one: Who's affected?

The first question to ask in the event of a live incident is: 'How is this issue affecting our users?'

Every issue has a different impact. The hope is, that with luck, the damage to users will be small. The good news was that in this case, it wasn’t was not big. They were lucky.

That Luck was self-generated. They'd built it into their systems.

How did they build this luck?

Engineers had built resilience into these systems. When a mistake was coded and shipped, the system failed well for users.

Authentication systems (like log-in) protect products. Not only does it need to be consistent in keeping people out, it also needs to be consistent and available in letting people in.

Building web frontends can be tricky. Correctly supporting every combination of every browser is an almost impossible task. Rather than expecting every change to be 100% correct for 100% of users, these engineers had bet on occasional mistakes making it to their users and built the system accordingly.

The log-in page was built to degrade gracefully. If the Javascript failed to run, the plain HTML page would continue to function.

If it doesn't break... how do you know it's broken?

The risk of having a system that handles failure well is that coding mistakes can go unseen and grow. You can end up with a bundle of bad code. A great way around this was to ensure systems will tell us when they fail, even when users couldn't see it.

The system emitted different data depending on how the user logged in. This allowed monitoring systems to keep an eye on the level of degradation. If it saw a large rise, it would let the team know, leading to this story's alert.

Jumping back to the key question: 'who's affected?'

In this incident, users were still being able to log in and access the products, so it was not a 🔥. The engineering team were the most affected - they still needed to investigate and fix. And that's the second key question I ask.

Question 2: What's the cause (and what’s the fix?)

Once the impact has been understood and control has been taken, focus can be placed on finding the cause.

The team had instrumentation output - logs and metrics. From this, they could see that the start of the issue coincided with a software release.

As the team practiced small and frequent software releases, it was a simple step to go from break ➜ release ➜ code change. It may not yet have been clear what was causing the problem, but there was a small changeset that was the likely cause.

Observing the situation

Knowing the location of the problem was not enough quite to solve it. The team needed to observe what was going on in their services and in their users' browsers to understand the problem. Detailed data about the users and operations happening on the system.

Browser fingerprints were part of the record for every successful log-in. Filtering for just the degraded logons quickly revealed a pattern in the user agent. From that, it was a small step to reproduce the broken state on the right browser and get a fix.

Build your own luck to move fast

You can build your own luck by building in ruggedness and observability. These features allow you to accept a varying level of quality without high impact and to quickly solve problems. Allowing your system to fail well.

Use these and you can keep fixing your problems as you go, shipping in small batches, which makes fault location easy.

More importantly, in combination, these support your teams to move and ship fast, getting new features to your users

Credits

Ice Cream Photo by Sarah Kilian

Design Photo by Brett Jordan